The Legal Innovation & Technology Lab's Spot API @ Suffolk Law School - Spot Version: 2022-05-21 (Build 10)

Is Spot Useful?

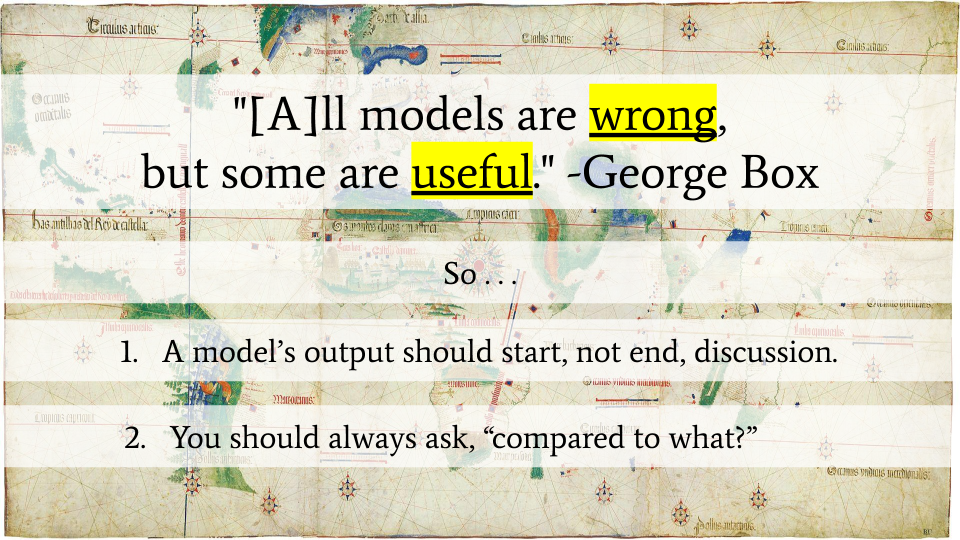

That depends on what you're using it for. Think of Spot like a map. It doesn't have resolution down to the inch, but it can still be helpful. Like a map, Spot is a model, and as the saying goes, "All models are wrong, but some are useful." Because it's "wrong," those using Spot should recognize that its output is not the final word, and if they want to know if it's useful, they'll have to ask "compared to what?" That being said, what you probably want to know is, "how wrong is it?"

Unfortunately, you can't just look at Spot's accuracy. To understand why, let's say you want to evaluate an algorithm that predicts if there will be a snow day tomorrow. I have an algorithm with an accuracy of 98%. Impressive, right? What if I told you my algorithm was "always guess no"? My model is 98% accurate because snow days only happen 2% of the time. To know if my algorithm is any good, you need to know more. So you might ask what percentage of actual snow days I "caught." This is called recall. The answer is 0%. You could also ask how often I'm right when I say something is a snow day. This is called precision. Since I didn't actually predict any snow days, I can't even calculate this number because I'd have to divide by zero. Either way, these alternative metrics make it clear that my model is no good. And none of that even takes into account that I may have a preference for false positives over false negatives or vice versa. Consider a screening test for some ailment. The idea is that it's one step in a process, a filter used to identify folks for a diagnostic test (i.e., it's not the final word). In such a case you might care more about minimizing false negatives than you do about false positives. The point is, it's complicated.
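To make the snow day example concrete, here is a minimal sketch in Python. The 100-day dataset and the `evaluate` helper are made up for illustration; they are not part of Spot.

```python
def evaluate(actual, predicted):
    """Return accuracy, recall, and precision for two lists of booleans."""
    pairs = list(zip(actual, predicted))
    tp = sum(1 for a, p in pairs if a and p)          # snow days we caught
    fp = sum(1 for a, p in pairs if not a and p)      # false alarms
    fn = sum(1 for a, p in pairs if a and not p)      # snow days we missed
    tn = sum(1 for a, p in pairs if not a and not p)  # correct "no" guesses
    return {
        "accuracy": (tp + tn) / len(pairs),
        # None marks a metric that would require dividing by zero.
        "recall": tp / (tp + fn) if (tp + fn) else None,
        "precision": tp / (tp + fp) if (tp + fp) else None,
    }


# Illustrative data: 100 days, 2 of which are snow days (2%).
actual = [True] * 2 + [False] * 98
always_no = [False] * 100  # the "always guess no" algorithm

result = evaluate(actual, always_no)
print(result)  # {'accuracy': 0.98, 'recall': 0.0, 'precision': None}
```

Accuracy looks great, recall is zero, and precision is undefined, which is the whole problem with judging a model by accuracy alone.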

You can see detailed performance metrics for each of our active labels by pinging the API's taxonomy method. It returns a JSON string with details about each label. See https://spot.suffolklitlab.org/v0/taxonomy/
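As a rough sketch, the taxonomy can be fetched with Python's standard library. The only things taken from this page are the URL and the fact that the response is JSON. The helper names `fetch_taxonomy` and `parse_taxonomy` are hypothetical, and no assumption is made here about the keys inside the response.

```python
import json
import urllib.request

TAXONOMY_URL = "https://spot.suffolklitlab.org/v0/taxonomy/"


def parse_taxonomy(raw):
    """Decode the raw response bytes into Python objects."""
    return json.loads(raw.decode("utf-8"))


def fetch_taxonomy(url=TAXONOMY_URL, timeout=30):
    """Download the taxonomy and return the parsed JSON.

    The shape of the result (dict vs. list, field names) is not
    documented on this page, so inspect it before relying on it.
    """
    with urllib.request.urlopen(url, timeout=timeout) as response:
        return parse_taxonomy(response.read())
```

Calling `fetch_taxonomy()` and inspecting the top-level type and a sample entry is a reasonable first step before writing code against specific fields.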

Those performance metrics, however, are the ones you get if you take the model as predicting the presence of a label when it states there is more than a 50% chance of it being there. If you change the API's cutoff, you can favor recall over precision, or the other way around. So API users should think carefully about their use case and what cutoff is appropriate. See Notes on Uncertainty below.
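The trade-off between recall and precision can be sketched with a handful of made-up predictions. The scores and ground-truth values below are invented for illustration and say nothing about Spot's actual behavior; `precision_recall` is a hypothetical helper.

```python
def precision_recall(scores, truths, cutoff):
    """Treat a score above `cutoff` as a positive prediction."""
    predicted = [s > cutoff for s in scores]
    tp = sum(1 for p, t in zip(predicted, truths) if p and t)
    fp = sum(1 for p, t in zip(predicted, truths) if p and not t)
    fn = sum(1 for p, t in zip(predicted, truths) if not p and t)
    precision = tp / (tp + fp) if (tp + fp) else None
    recall = tp / (tp + fn) if (tp + fn) else None
    return precision, recall


# Invented example: model scores and whether the issue was really present.
scores = [0.95, 0.80, 0.60, 0.45, 0.40, 0.30, 0.20, 0.10]
truths = [True, True, False, True, False, True, False, False]

for cutoff in (0.70, 0.50, 0.25):
    precision, recall = precision_recall(scores, truths, cutoff)
    print(f"cutoff={cutoff:.2f} precision={precision:.2f} recall={recall:.2f}")
```

With this toy data, the strictest cutoff yields perfect precision but catches only half of the true issues, while the loosest cutoff catches all of them at the cost of more false positives.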

Active Labels

The average weighted† accuracy for our active labels is currently 94.0%. For recall, it's 78.0%, and precision is 76.0%. That "active labels" qualifier limits our model to only those labels for which we can make predictions better than a coin flip or always guessing yes/no, and it only includes the following 120 labels:

- Public Benefits; Disability public benefits; State and local benefits for the disabled; Social Security Disability Insurance (SSDI); Food and Cash benefits...
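For readers wondering what "weighted" means for the averages above, the following sketch shows one plain reading of the footnote: each label's metric counts in proportion to its number of affirmative examples. The metric values and counts are invented, and `weighted_average` is a hypothetical helper, not Spot's own code.

```python
def weighted_average(values, weights):
    """Average `values`, weighting each by the matching entry in `weights`."""
    total = sum(weights)
    if total == 0:
        raise ValueError("weights must not sum to zero")
    return sum(v * w for v, w in zip(values, weights)) / total


# Invented example: recall for two labels and their affirmative example counts.
recalls = [0.9, 0.5]
affirmative_examples = [300, 100]

print(weighted_average(recalls, affirmative_examples))
```

The label with 300 examples pulls the result toward its own recall, so the weighted figure lands well above the simple mean of 0.7.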

Remember, you can see every LIST issue and its performance metrics by calling the API's taxonomy method. Only active labels will show performance data. All others will return TBD. See https://spot.suffolklitlab.org/v0/taxonomy/

LIST (formerly NSMIv2)

The following is a list of the parent and first-level child labels Spot is currently learning (click through for the first level of children). Children of children are currently not shown here but can be seen in the list above in Active Labels. Highlighted labels are active labels (i.e., green labels have F1 >= 0.83, pea green labels have F1 >= 0.63, and yellow labels have F1 >= 0.50). Please note, this is not a complete list of all issues in the world. It represents only the current finalized issues found in LIST. Consequently, do not assume that subtopics are all-inclusive. They are just the issues that have been finalized for inclusion in LIST at this point. LIST is in active development, and more issues are added regularly. Note: label descriptions are provided when available.
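The highlight thresholds above can be expressed as a small lookup. F1 is the harmonic mean of precision and recall; the thresholds come from the text, while the function names and the choice to return `None` for a label below 0.50 are assumptions made for this sketch.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall (0.0 if both are zero)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


def highlight_tier(f1):
    """Map an F1 score to the highlight color described above."""
    if f1 >= 0.83:
        return "green"
    if f1 >= 0.63:
        return "pea green"
    if f1 >= 0.50:
        return "yellow"
    return None  # assumption: anything lower is not highlighted


print(highlight_tier(f1_score(0.5, 0.5)))  # yellow
```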

We're always working to grow our list of active labels and improve the metrics associated with each. If you'd like to help, you can play a few rounds of Learned Hands or share relevant data to help grow our training set.

Notes on Uncertainty

When Spot returns a list of issues, it provides a prediction accompanied by a lower and upper bound for that prediction. See Documentation. The bounds are intended to provide users with information about the uncertainty for a given prediction, allowing them to tailor their results to their use case and tolerance for error. The larger the distance between the lower bound and the prediction, the more likely it is that the prediction is overly optimistic about finding the issue. Conversely, the larger the difference between the prediction and the upper bound, the more likely it is that the prediction is under-estimating the chance that the issue is present. Users are encouraged to consider this when setting the cutoffs for triggering the return of an issue. For example, a user interested in avoiding false negatives may set Spot to return results based on the upper bound being greater than 50%, while one interested in avoiding false positives might choose to base their cutoff on the lower bound. The distance between the lower and upper bounds communicates something about the overall uncertainty around a prediction, with the most certain predictions being those with the smallest distances between the two.
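One way to act on this advice is to pick which of the three values a cutoff is applied to. The sketch below assumes each returned issue is a dictionary with `name`, `lower`, `pred`, and `upper` keys. Those field names, the sample values, and the `select_issues` helper are all hypothetical, so check the Documentation for the real response format.

```python
def select_issues(labels, cutoff=0.5, basis="pred"):
    """Return the names of issues whose chosen value exceeds the cutoff.

    basis="upper" favors recall (fewer false negatives);
    basis="lower" favors precision (fewer false positives).
    """
    if basis not in {"lower", "pred", "upper"}:
        raise ValueError("basis must be 'lower', 'pred', or 'upper'")
    return [label["name"] for label in labels if label[basis] > cutoff]


# Invented response data; the keys are assumptions, not Spot's documented schema.
labels = [
    {"name": "issue A", "lower": 0.62, "pred": 0.81, "upper": 0.93},
    {"name": "issue B", "lower": 0.31, "pred": 0.48, "upper": 0.66},
    {"name": "issue C", "lower": 0.05, "pred": 0.12, "upper": 0.30},
]

print(select_issues(labels, basis="upper"))  # ['issue A', 'issue B']
print(select_issues(labels, basis="lower"))  # ['issue A']
```

Here "issue B" is returned only when the cutoff is applied to the upper bound, which is the behavior a user trying to avoid false negatives would want.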

Currently, lower bounds are calculated by multiplying a model's precision for a given label by its prediction, and upper bounds are calculated by multiplying the prediction for the model's inverse (i.e., the model where positive and negative classifications are swapped) by its precision and subtracting it from one.
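Read literally, the two calculations look like the sketch below. It assumes that "its precision" in the upper bound refers to the precision of the inverse model, and it takes the inverse model's prediction as a separate input rather than deriving it from the original prediction. The function names and sample numbers are illustrative only.

```python
def lower_bound(prediction, precision):
    """Lower bound: the model's precision times its prediction."""
    return precision * prediction


def upper_bound(inverse_prediction, inverse_precision):
    """Upper bound: one minus (inverse model's precision times its prediction).

    Assumption: the precision used here belongs to the inverse model,
    i.e., the model with positive and negative classes swapped.
    """
    return 1 - inverse_precision * inverse_prediction


# Invented numbers for a single label.
low = lower_bound(prediction=0.8, precision=0.75)
high = upper_bound(inverse_prediction=0.2, inverse_precision=0.9)
print(round(low, 2), round(high, 2))  # 0.6 0.82
```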

Take Spot for a Test Drive

If you'd like to try Spot without making an account, feel free to take it for a test drive.

† Values are weighted based on the number of affirmative examples for each issue in our dataset.